When I upgraded my Synology NAS back in 2023, I just swapped the existing 8TB drives into the new enclosure. After my recent network outage during Hurricane Milton, I found a warning on the storage pool indicating file system errors. While running some file system checks, I realized that the volume was still ext4 instead of the newer (and recommended) btrfs file system, because the previous NAS the drives came from didn’t support btrfs. That sent me down a research rabbit hole on how to “convert” the ext4 volume to btrfs. Short answer … well, there was no short answer.

The supported Synology way was, of course, the typical “back up everything, delete the ext4 volume, create the btrfs volume, restore your backup.” That would mean a good chunk of downtime waiting for 4TB of data to be restored, which I really didn’t want to deal with, since I run a few Docker containers on the NAS and one of them hosts the MySQL databases for this blog and my photo sites. So I sought out some other articles (like this one and this one) describing an alternative method: since I have two drives in a RAID configuration, eject one drive from the existing ext4 volume, reformat it and create a new btrfs volume on it, then manually copy everything from the ext4 volume to the btrfs volume. Presumably this would be faster, since the copy would be internal drive to internal drive instead of external USB drive to internal drive.

I did do a backup, though … you know, just in case! Using a spare 6TB drive in a new USB 3.0 enclosure, I used Hyper Backup to make a full backup, which took about 31 hours, plus a few more hours afterward to catch up on the incrementals. With that complete, it was time to tinker (and break things!).

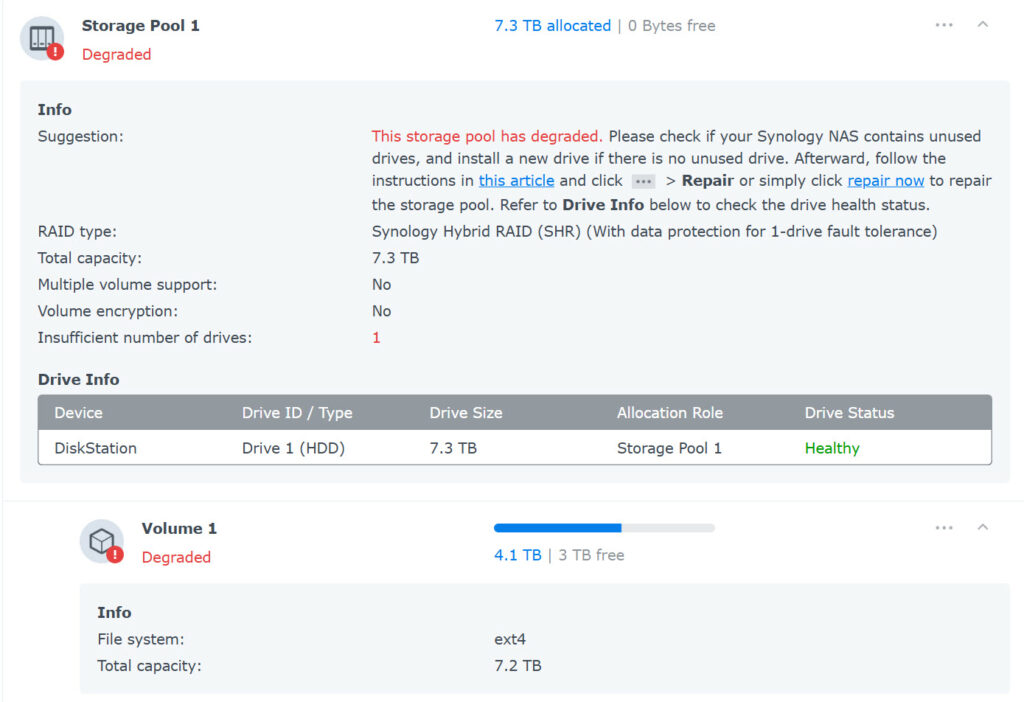

First I deactivated Drive 2 and removed it from the NAS. As expected, this put the RAID volume in a degraded state, but the NAS continued to run normally.

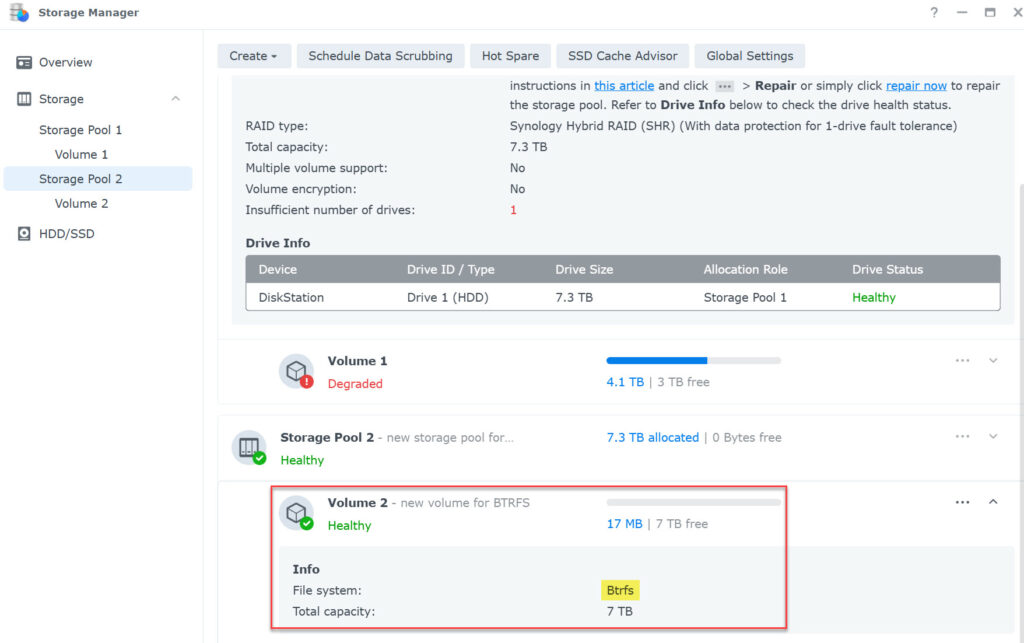

I then attached Drive 2 to my laptop, formatted it and re-inserted it into the Synology. In Storage Manager, I created a new storage pool using just Drive 2 and created a new btrfs volume on it (behind the scenes, in the Linux file system, this resulted in a /volume2 folder).

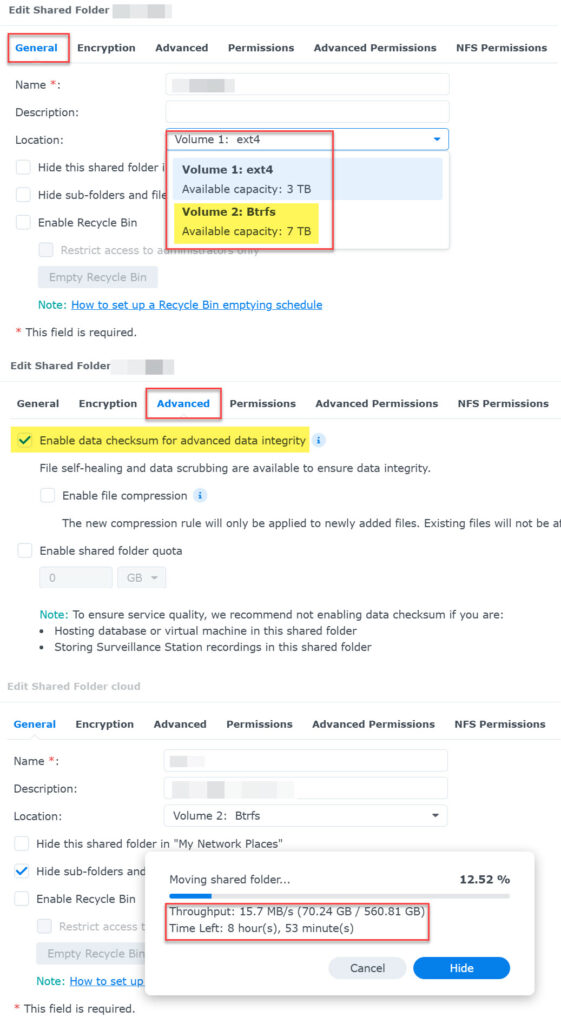

Now it was time to move the shared folders from the ext4 volume to the new btrfs volume. You can’t (or shouldn’t) just do the copy from the Linux command-line. Instead, using the Shared Folder control panel, you change the location of the shared folder from the old volume to the new one and then DSM handles the copy. This is also where you can turn on the “enable data checksum for advanced data integrity” feature of btrfs (which wouldn’t happen if you just manually copied the files, apparently).

The copy runs in the background, but there’s a noticeable performance hit since both drives are “busy.” I mistakenly kicked off two shared folder moves at the same time and throughput really suffered, so I recommend sticking to one at a time. It took about 8 hours to transfer the 4.2TB of shared folders to the new volume (much better than the 31-hour backup time!).
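For a rough sense of how big that difference is, here is a quick back-of-the-envelope calculation. It assumes decimal units (1 TB = 10^12 bytes) and roughly 4.2 TB moved in both cases, and it only gives an average; real throughput varies with file sizes and whatever else the drives are doing.

```python
# Average throughput for the two transfers described above.
# Assumptions: decimal units (1 TB = 10**12 bytes, 1 MB = 10**6 bytes)
# and ~4.2 TB moved in both the backup and the shared-folder move.

def avg_mb_per_s(terabytes: float, hours: float) -> float:
    """Average rate in MB/s for moving `terabytes` of data in `hours`."""
    return terabytes * 1e12 / (hours * 3600) / 1e6

usb_backup = avg_mb_per_s(4.2, 31)    # Hyper Backup to the USB 3.0 enclosure
internal_move = avg_mb_per_s(4.2, 8)  # DSM shared-folder move, drive to drive

print(f"USB backup:    {usb_backup:.0f} MB/s")
print(f"Internal move: {internal_move:.0f} MB/s")
print(f"Speedup:       {internal_move / usb_backup:.1f}x")
```

That works out to roughly 38 MB/s for the USB backup versus about 146 MB/s for the drive-to-drive move, close to a 4x difference.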

That covers the data on the NAS. The installed packages (like Docker, Plex, etc.) also need to be moved to the new volume. Synology’s method involves uninstalling each app, re-installing it with the new btrfs volume as the install target, and re-configuring everything. But why do that when someone else has a better solution (the problems I ran into below notwithstanding)? After searching through some forum posts on the same topic, I found the Synology App Mover on GitHub. This script lets you back up the settings for each installed app in DSM and then move the apps between volumes. I still had to do some manual work, like updating the paths to my media libraries in Plex and in my various scheduled task scripts (to account for the change from /volume1 to /volume2), but for 90% of my installed apps, the mover script worked perfectly.
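If your scheduled tasks call script files stored on the NAS, the /volume1 to /volume2 edits can be scripted rather than done by hand. Here is a minimal sketch, assuming the scripts are plain text files and Python 3 is available. The function names `retarget_volume` and `retarget_file` are hypothetical, not part of DSM or the App Mover script. It only matches the old volume as a whole path component, so something like /volume10 is left alone, and it keeps a `.bak` copy before rewriting.

```python
import re
import shutil
from pathlib import Path

def retarget_volume(text: str, old: str = "/volume1", new: str = "/volume2") -> str:
    """Replace the `old` volume prefix with `new` wherever it starts a path.

    The lookbehind skips matches preceded by a word character or slash
    (e.g. /data/volume1); the lookahead skips longer names (e.g. /volume10).
    """
    pattern = re.compile(r"(?<![\w/])" + re.escape(old) + r"(?!\w)")
    # A callable replacement avoids any backslash interpretation in `new`.
    return pattern.sub(lambda _m: new, text)

def retarget_file(path: str, old: str = "/volume1", new: str = "/volume2") -> bool:
    """Rewrite one script in place, keeping a .bak copy. Returns True if it changed."""
    p = Path(path)
    original = p.read_text(encoding="utf-8")
    updated = retarget_volume(original, old, new)
    if updated == original:
        return False
    shutil.copy2(p, p.with_name(p.name + ".bak"))  # keep the original alongside
    p.write_text(updated, encoding="utf-8")
    return True

# Example: a hypothetical rsync line from a scheduled task script.
print(retarget_volume("rsync -a /volume1/photos/ /volume1/backup/"))
```

The example prints `rsync -a /volume2/photos/ /volume2/backup/`. Anything configured inside an app (like Plex library paths) still has to be changed in that app.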

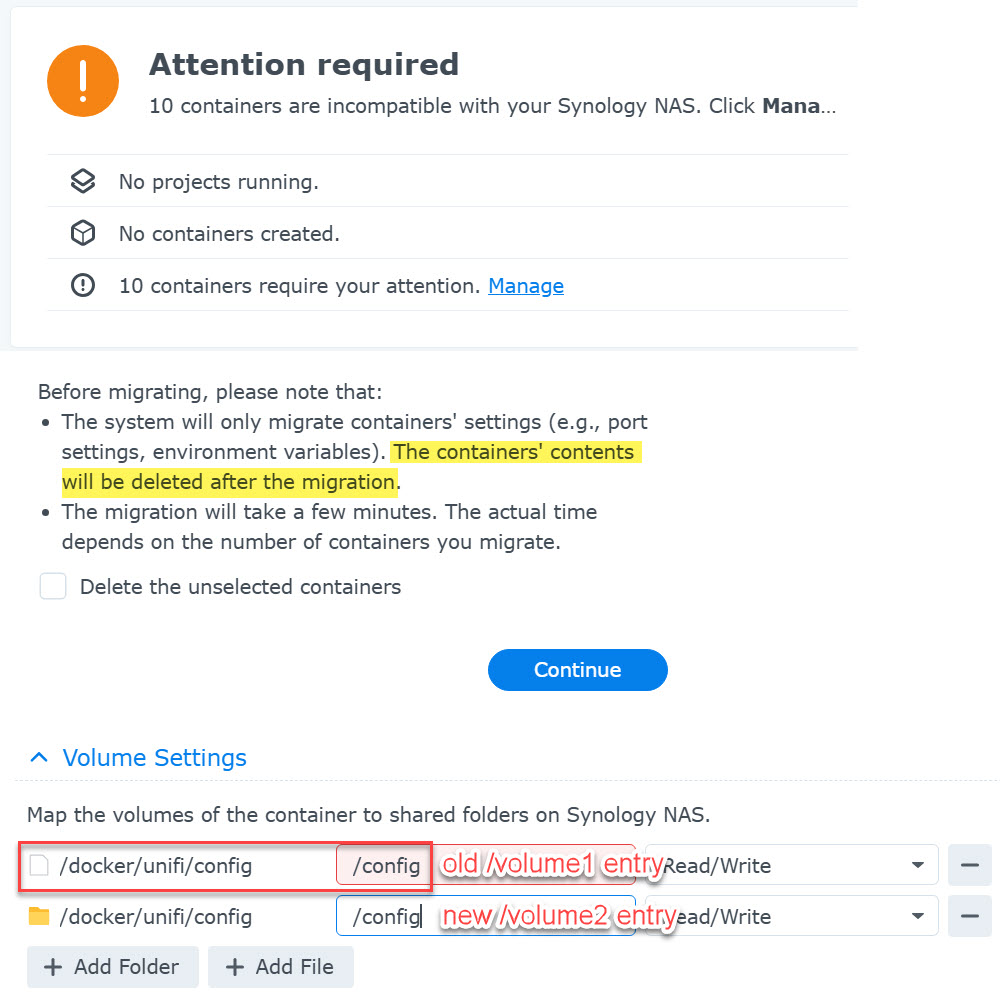

The two exceptions were Container Manager (Docker) and IDrive. First, Container Manager complained that my containers were “incompatible” and needed to be “migrated,” along with a somewhat confusing (and scary) message that “the containers’ contents will be deleted after the migration.” This turned out to be a known issue, as the script author confirmed in response to the issue I filed. All of my container data was in a shared folder, though, so I let the migration run, and it seemed to work. I did have to update my Docker Compose YAML to account for the new /volume2 path, but after that all of my containers seemed to start up fine.

IDrive was a little weirder. The app was running, and my backup sets and preferences all seemed correct, but the file system shown in the app, which should have mirrored my shared folder setup, was empty. It wasn’t seeing any folders or files! I wondered if this was another /volume2 path issue, but I could find no config files or settings that controlled it. I filed an issue in the Synology App Mover repo, and while trying to figure this out, I had a realization: IDrive saves files in a backup set with their full path, so everything in my current cloud backup of the NAS was pathed something like /volume1/share/folder/file.txt. Now that the path was /volume2/share/folder/file.txt, the IDrive app would re-upload everything as new! That would double my storage in my IDrive account and probably take weeks. I sent a ticket to IDrive support, and they said they could make changes on the back end, but I really didn’t trust that.
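To illustrate why the path change matters, here is a toy model of a backup index keyed by full path. This is an assumption for illustration only; I don’t know how IDrive stores its index internally. The point is just that if a file counts as “already backed up” only when its exact path and content are already recorded, then identical data under a new volume prefix all looks new. The function `plan_uploads` and the sample files are hypothetical.

```python
from hashlib import sha256

def plan_uploads(index: dict[str, str], files: dict[str, bytes]) -> list[str]:
    """Return the paths a path-keyed backup would upload.

    index: full path -> content hash already stored in the cloud backup
    files: full path -> current file contents on the NAS
    A file is skipped only if its exact path maps to the same hash.
    """
    uploads = []
    for path, data in files.items():
        if index.get(path) != sha256(data).hexdigest():
            uploads.append(path)
    return uploads

# Hypothetical share contents, relative to the volume root.
contents = {"share/folder/file.txt": b"hello", "share/photos/img.jpg": b"\xff\xd8"}

# The cloud index was built while everything lived under /volume1.
index = {"/volume1/" + rel: sha256(data).hexdigest() for rel, data in contents.items()}

same_volume = {"/volume1/" + rel: data for rel, data in contents.items()}
new_volume = {"/volume2/" + rel: data for rel, data in contents.items()}

print(plan_uploads(index, same_volume))  # nothing to upload
print(plan_uploads(index, new_volume))   # every file, despite identical data
```

Under this model, the only ways out are rewriting the stored paths on the back end or putting the files back at their old paths.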

What to do? After some thought, it was obvious: move everything back to /volume1.

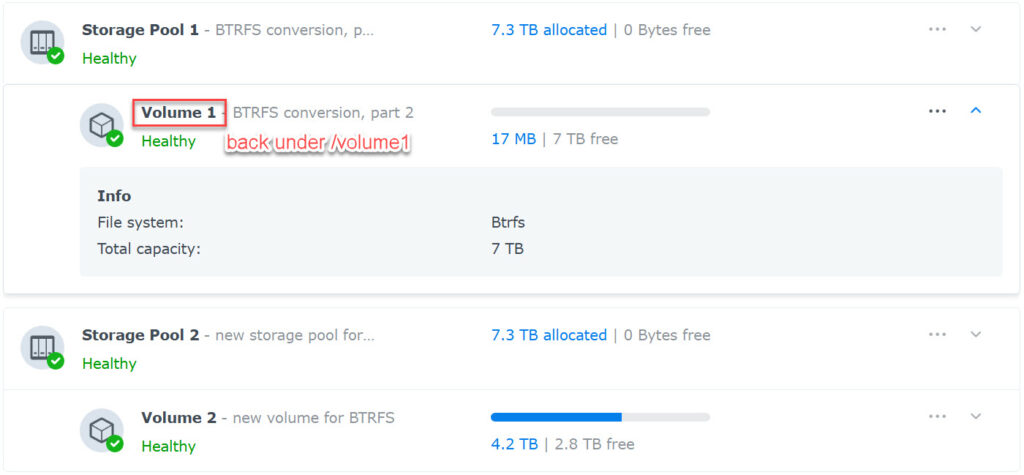

So yeah, I did it all again, but this time with Drive 1. Now that /volume1 was empty, I removed the ext4 volume and storage pool from Drive 1, which left Drive 1 as an available spare. I created a new storage pool and btrfs volume, which ended up back under /volume1 like I wanted.

Wash, rinse, repeat. It took another 8 hours or so to move all the shared folders on /volume2 back to /volume1, and then I used the Synology App Mover script again to move the packages back (including re-updating my scripts and Plex library paths). Once again, Container Manager and IDrive gave me headaches. This time one of the containers would not “migrate” and kept throwing a “failed to destroy btrfs snapshot” error. I found a similar issue on GitHub, which pointed me to a Docker cleanup script by the same author. Without doing enough research to understand its purpose, I ran the script, which found and removed 148 (!!) btrfs subvolumes and completely messed up all of my containers. Then, when I tried to create new ones, Container Manager threw API errors (like this). Oops!

I had to run `sudo rm -rf /volume1/@docker/image/btrfs`, and then I was able to use my Docker Compose YAML files to rebuild everything. Again, since all of my Docker volumes were on a shared folder, I didn’t lose any data. Whew! I probably should have just re-installed the app clean to begin with rather than messing with the mover script. One benefit of going through this, though, was that I ended up creating Docker Compose YAML files for all of my containers and putting them into projects instead of using the GUI in Container Manager.
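In hindsight, it’s cheap to take a read-only inventory before running anything that deletes subvolumes. Here is a minimal sketch, assuming you have SSH access and the `btrfs` command-line tool, and have saved the output of `sudo btrfs subvolume list /volume1` to a text file. The parser reads the tool’s default line format and keeps the subvolumes under a given prefix. The `@docker/` default prefix is an assumption based on the `/volume1/@docker` path above, and the sample lines are made up, so check against your own output.

```python
import re

# Default line format of `btrfs subvolume list`, e.g.
#   ID 256 gen 8 top level 5 path @docker/btrfs/subvolumes/abc123
LINE = re.compile(r"^ID (\d+) gen (\d+) top level (\d+) path (.+)$")

def parse_subvolumes(output: str) -> list[tuple[int, str]]:
    """Return (subvolume ID, path) pairs from `btrfs subvolume list` output."""
    subvols = []
    for line in output.splitlines():
        m = LINE.match(line.strip())
        if m:
            subvols.append((int(m.group(1)), m.group(4)))
    return subvols

def docker_subvolumes(output: str, prefix: str = "@docker/") -> list[tuple[int, str]]:
    """Keep only subvolumes whose path starts with `prefix` (an assumed location)."""
    return [(sid, path) for sid, path in parse_subvolumes(output) if path.startswith(prefix)]

# Made-up sample; in practice, read the saved file, e.g.
#   output = open("subvols.txt").read()
sample = """ID 256 gen 9 top level 5 path @docker
ID 312 gen 41 top level 256 path @docker/btrfs/subvolumes/3f9a
ID 313 gen 42 top level 256 path @docker/btrfs/subvolumes/3f9a-init
ID 400 gen 50 top level 5 path @sharesnap"""

found = docker_subvolumes(sample)
for sid, path in found:
    print(sid, path)
print(f"{len(found)} subvolumes under @docker/")
```

Nothing here modifies the file system. It only tells you how many subvolumes a cleanup would be dealing with before you decide to run one.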

Even though everything was back on /volume1, the IDrive app was still messed up, not seeing any files or folders. Since I had my config documented (screenshots and such), I ended up uninstalling the app, re-installing it, and setting up everything (including my backup sets) from scratch. This actually worked and the next backup run only picked up changes like it was supposed to, rather than re-uploading everything.

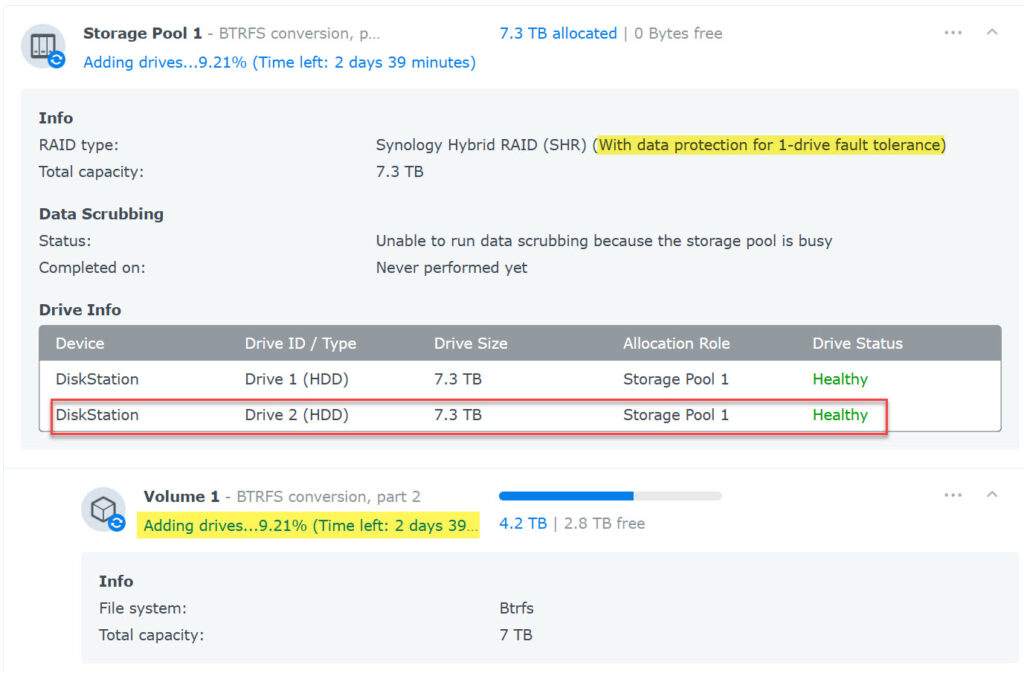

Finally everything was back up and running on a btrfs volume with the same /volume1 path as before. Now that /volume2 was empty, I removed it from Storage Manager, which freed up Drive 2 so I could add it back to /volume1 for RAID redundancy. The rebuild ran in the background and took about 3 days.

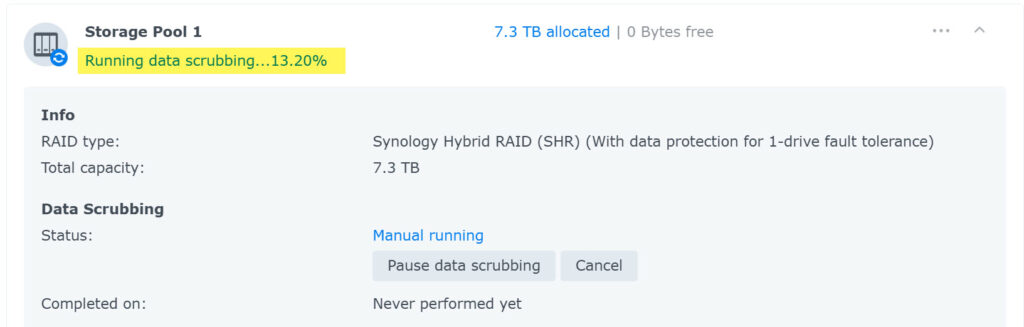

Now that I had a healthy RAID volume on btrfs, I enabled some of the additional health features, like data scrubbing (which took about 6 hours) and space reclamation.

I said it wasn’t a short answer, didn’t I? Start to finish, it took me about a week, but I have finally converted my Synology NAS from ext4 to btrfs. At least I didn’t need that backup. 🙂